Across the history of human civilization, “creating humans” is arguably one of humanity’s oldest and most enduring dreams.

Myths and legends around the world reflect this dream in many forms: from China’s Nvwa to Prometheus in ancient Greece, we have always wanted to create another “self” with our own hands.

This dream runs through thousands of years of artistic and technological development. From puppets, pottery figurines, and rag dolls to modern lifelike dolls and virtual digital humans, the more advanced the technology, the more concrete and complex the path to “creating humans” becomes. Once mechanical structures, intelligent algorithms, and materials technology reach a certain stage, creating a truly “human-like” machine life becomes an almost inevitable direction of technological evolution.

And so we have arrived at the era of “humanoid robots”.

How can robots be more “human-like”?

Today, humanoid robot development is clearly diverging along two paths. One emphasizes being “more like a machine”, pursuing execution efficiency, control accuracy, and industrial adaptability. The other insists on being “more like a human”: not only looking human, but also interacting naturally with people through behavior, language, and emotion.

[Image: “More like a machine” and “more like a human”]

The latter is precisely the direction explored by simulation humanoid robots, that is, highly lifelike humanoids. They target exhibition halls, shopping malls, schools, and homes, and are especially suited to scenarios that call for “anthropomorphism”, such as presenting cultural IP, immersive guided tours, and emotional care.

Compared with functional robots, simulation robots are technically harder to build. The difficulty lies not only in the complexity of individual modules but also in the consistency of the whole system. When a machine looks close to human but its expressions, speech, or movements feel out of step, it easily triggers an instinctive aversion in people. This is the well-known “uncanny valley effect”.

“Uncanny valley effect”: As robots become more similar to humans in appearance and behavior (horizontal axis), people’s affinity for them (vertical axis) generally rises. But when a robot is highly realistic yet slightly uncoordinated, affinity drops sharply.

When facing a highly realistic robot, people do not judge its credibility simply by how advanced the technology is. Instead, they instinctively sense whether it is “like a real person” from multimodal signals such as visual detail, movement rhythm, and emotional feedback.

Therefore, for a simulation humanoid robot to truly cross this psychological threshold, it must achieve coordinated breakthroughs in three core dimensions:

- Appearance simulation

The first step for a simulation robot is to reproduce human appearance. This is not just about looks; it is the first threshold users cross when deciding whether to approach it.

Bionic skin materials: Typically high-precision silicone, thermoplastic elastomers, and similar materials, whose color, texture, translucency, and reflectivity are tuned to mimic the look and feel of human skin.

Humanoid skeletal structure: The proportions and joint positions of the head, torso, limbs, and other parts must match human physiology as closely as possible to support natural posture and smooth movement.

Facial expression drive system: Micro servos, flexible cables, or pneumatic muscle modules produce fine movements of the eyes, eyebrows, lips, and other parts, reproducing basic expressions such as blinking, smiling, and frowning.
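To make the idea of an expression drive system more concrete, here is a minimal sketch, assuming each expression is stored as a preset of normalized activations (0 to 1) per actuator channel and that each channel maps linearly onto a servo angle. The channel names, angle limits, and presets are hypothetical illustrations, not taken from any product mentioned in this article.

```python
from typing import Dict, Tuple

# Hypothetical actuator channels with assumed mechanical limits in degrees (min, max).
SERVO_LIMITS: Dict[str, Tuple[float, float]] = {
    "brow_left": (60.0, 120.0),
    "brow_right": (60.0, 120.0),
    "eyelid": (0.0, 90.0),
    "mouth_corner": (70.0, 110.0),
}

# Hypothetical expression presets: normalized activation (0..1) for each channel.
PRESETS: Dict[str, Dict[str, float]] = {
    "neutral": {"brow_left": 0.5, "brow_right": 0.5, "eyelid": 0.0, "mouth_corner": 0.5},
    "smile": {"brow_left": 0.6, "brow_right": 0.6, "eyelid": 0.2, "mouth_corner": 1.0},
    "frown": {"brow_left": 0.0, "brow_right": 0.0, "eyelid": 0.3, "mouth_corner": 0.2},
}

def blend_expression(weights: Dict[str, float]) -> Dict[str, float]:
    """Blend presets by weight and map each channel to a clamped servo angle (degrees)."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    angles = {}
    for channel, (low, high) in SERVO_LIMITS.items():
        # Weighted average of this channel's activation across the chosen presets.
        activation = sum(PRESETS[name][channel] * w for name, w in weights.items()) / total
        activation = min(max(activation, 0.0), 1.0)  # keep within the normalized range
        angles[channel] = low + activation * (high - low)  # linear map to degrees
    return angles
```

For example, `blend_expression({"neutral": 0.5, "smile": 0.5})` sets `mouth_corner` to 100.0 degrees, halfway between the neutral (90.0) and full-smile (110.0) positions. Blending presets rather than switching between them is one simple way to avoid abrupt jumps in expression.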

- Behavior simulation

A simulation robot should not only look human but also “move like a human”. This requires natural, smooth, and coordinated movement, which depends on the following capabilities:

Multi-degree-of-freedom flexible actuation: Servo motors, flexible actuators, or bionic muscle systems control multiple joints to produce complex movements such as turning the head, waving an arm, or grasping with the fingers. Actuation accuracy and response speed determine how smooth the movement looks.

Motion coordination algorithms: These keep the movement logic across joints consistent, for example coordinating arms and legs while walking or making small body adjustments when the head turns, so as to avoid “mechanical breaks” in motion or delayed, lagging reactions.
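One widely used way to get smooth, human-like point-to-point motion is a minimum-jerk trajectory, whose position profile starts and ends with zero velocity and acceleration. The sketch below assumes simple position-controlled joints identified by hypothetical names; it illustrates the general technique and is not the control method of any company discussed here. Giving every joint the same duration makes them start and stop together, which is one simple guard against the disjointed motion described above.

```python
from typing import Dict, List

def min_jerk(q0: float, qf: float, duration: float, t: float) -> float:
    """Position at time t on a minimum-jerk path from q0 to qf over `duration` seconds."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    tau = min(max(t / duration, 0.0), 1.0)  # normalized time, clamped to [0, 1]
    # Quintic blend with zero velocity and acceleration at both ends.
    s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    return q0 + (qf - q0) * s

def coordinated_trajectory(start: Dict[str, float], goal: Dict[str, float],
                           duration: float, steps: int) -> List[Dict[str, float]]:
    """Sample all joints on one shared time base so they start and finish together."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    times = [duration * i / steps for i in range(steps + 1)]
    return [{joint: min_jerk(start[joint], goal[joint], duration, t) for joint in start}
            for t in times]
```

Streaming each sampled dictionary to the joint controllers at a fixed rate would give every joint the same bell-shaped velocity profile, so no joint visibly finishes early or lags behind.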

Posture balance and dynamic control: While standing, sitting, walking, and so on, the robot relies on an IMU (inertial measurement unit) and ground-contact sensing to adjust its center of gravity dynamically, keeping its posture stable and avoiding falls or stiff movement.
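As a rough illustration of how IMU data can feed posture control, the sketch below fuses a gyroscope rate and an accelerometer reading with a complementary filter to estimate body pitch, then computes a proportional-derivative correction. It is a deliberately simplified single-axis sketch: the axis and sign conventions, the filter weight `alpha`, and the gains `kp` and `kd` are all assumptions, and real humanoid balance controllers (which also use ground-contact sensing) are far more involved.

```python
import math

def complementary_pitch(prev_pitch: float, gyro_rate: float,
                        accel_x: float, accel_z: float,
                        dt: float, alpha: float = 0.98) -> float:
    """Estimate pitch (rad) by fusing gyro rate (rad/s) with the accelerometer's gravity reading."""
    # Integrating the gyro is smooth over short intervals but drifts over time.
    gyro_pitch = prev_pitch + gyro_rate * dt
    # Gravity gives an absolute but noisy tilt estimate. Assumed convention:
    # positive accel_x corresponds to positive pitch for this IMU mounting.
    accel_pitch = math.atan2(accel_x, accel_z)
    # Trust the gyro short-term and let the accelerometer correct drift long-term.
    return alpha * gyro_pitch + (1.0 - alpha) * accel_pitch

def balance_correction(pitch: float, pitch_rate: float,
                       kp: float = 50.0, kd: float = 5.0) -> float:
    """Hypothetical PD command that pushes the body back toward upright (pitch = 0)."""
    return -(kp * pitch + kd * pitch_rate)
```

The filter weight trades responsiveness against drift: values close to 1 follow fast motions through the gyro, while the small accelerometer share slowly pulls the estimate back toward the true tilt.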

- Interactive simulation

Communication between people involves far more than transmitting verbal information. It also relies on the interplay of multiple layers of signals, such as tone of voice, eye contact, emotion, and rhythm. Interactive simulation tries to reconstruct this multimodal process so that human-computer communication no longer feels rigid.

Semantic understanding and language generation: Using large language models (LLMs), robots can grasp the content, emotion, and contextual intent of what a user says, generate well-structured responses with appropriate intonation, and maintain a degree of “contextual memory”.

Linking emotion and facial expression: While speaking, the robot can display matching facial expressions in sync, such as smiling, frowning, or staring. Some systems also generate emotional feedback proactively by analyzing semantic content or keywords, producing a “warm response”.
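The keyword-analysis approach can be illustrated with a toy example: score the user’s words against small emotion lexicons and pick the expression with the most matches. The lexicon and expression names below are hypothetical; production systems would more likely rely on a language model or a trained sentiment classifier, which this sketch does not attempt to reproduce.

```python
import re
from typing import Dict, Set

# Hypothetical lexicon mapping an expression name to trigger words.
EMOTION_KEYWORDS: Dict[str, Set[str]] = {
    "smile": {"thanks", "great", "wonderful", "happy"},
    "concern": {"sorry", "sad", "lost", "sick"},
    "surprise": {"wow", "really", "amazing"},
}

def pick_expression(text: str, default: str = "neutral") -> str:
    """Return the expression whose keywords appear most often in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    scores = {name: sum(word in keywords for word in words)
              for name, keywords in EMOTION_KEYWORDS.items()}
    best = max(scores, key=scores.get)  # ties go to the first entry in the lexicon
    # Fall back to a neutral face when no keyword matched.
    return best if scores[best] > 0 else default
```

For instance, “Thanks, that’s great!” maps to `smile`, while a sentence with no trigger words falls back to `neutral`. Word counting ignores negation and sarcasm, which is one reason richer semantic analysis is usually preferred.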

Gaze and attention system: Using face recognition, sound source localization, and related technologies, the robot can dynamically track the user’s position and adjust the direction of its head and eyes to simulate “gaze”, strengthening the user’s sense of being attended to and their immersion in the conversation.
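A gaze system ultimately has to turn a target position into head (or eye) angles. The sketch below assumes the target is given in a head-centred frame with x forward, y left, and z up; it prefers a detected face, falls back to a sound-source azimuth when no face is found, and clamps to joint limits. The frame convention, limits, assumed distance, and smoothing gain are all hypothetical choices.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

def choose_target(face_xyz: Optional[Vec3], sound_azimuth: Optional[float],
                  assumed_distance: float = 1.5) -> Optional[Vec3]:
    """Prefer a detected face; otherwise look toward the sound source at eye level."""
    if face_xyz is not None:
        return face_xyz
    if sound_azimuth is not None:
        # An azimuth only gives a direction, so place the target at an assumed distance.
        return (assumed_distance * math.cos(sound_azimuth),
                assumed_distance * math.sin(sound_azimuth), 0.0)
    return None

def gaze_angles(target: Vec3, yaw_limit: float = math.radians(70),
                pitch_limit: float = math.radians(30)) -> Tuple[float, float]:
    """Yaw and pitch (rad) that point the head at the target, clamped to joint limits."""
    x, y, z = target
    yaw = math.atan2(y, x)
    pitch = math.atan2(z, math.hypot(x, y))
    yaw = max(-yaw_limit, min(yaw_limit, yaw))
    pitch = max(-pitch_limit, min(pitch_limit, pitch))
    return yaw, pitch

def smooth(current: float, desired: float, gain: float = 0.2) -> float:
    """Move a fraction of the way toward the desired angle on each control tick."""
    return current + gain * (desired - current)
```

Applying `smooth` every control tick makes the head ease toward a new speaker instead of snapping to them, which tends to read as more natural attention.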

Multimodal coordination mechanism: This keeps speech, expression, and movement synchronized in time, avoiding discordant moments that break the sense of anthropomorphism, such as “the mouth moving while the eyes stay still” or “smiling while saying angry words”.
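One way to catch discordant moments, such as an expression that contradicts the words being spoken, is to check the planned timelines before playback. The sketch below assumes speech and facial expressions are both scheduled as emotion-labelled time segments; the `Segment` type, the labels, and the 100 ms tolerance are hypothetical choices for illustration, not published thresholds.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Segment:
    emotion: str   # hypothetical label, e.g. "happy" or "angry"
    start_ms: int
    end_ms: int

def check_sync(speech: List[Segment], expressions: List[Segment],
               tolerance_ms: int = 100) -> List[str]:
    """Report speech segments whose facial expression is missing, mistimed, or mismatched."""
    issues = []
    for seg in speech:
        # Expression segments that overlap this utterance in time.
        overlapping = [e for e in expressions
                       if e.start_ms < seg.end_ms and e.end_ms > seg.start_ms]
        if not overlapping:
            issues.append(f"{seg.start_ms} ms: speech with no expression")
            continue
        first = min(overlapping, key=lambda e: e.start_ms)
        offset = first.start_ms - seg.start_ms
        if abs(offset) > tolerance_ms:
            issues.append(f"{seg.start_ms} ms: expression offset {offset} ms")
        if any(e.emotion != seg.emotion for e in overlapping):
            issues.append(f"{seg.start_ms} ms: expression does not match '{seg.emotion}' speech")
    return issues
```

An angry utterance paired with a happy expression is flagged as a mismatch, and an expression that starts 300 ms after its sentence is flagged as mistimed; an empty list means the plan passed both checks.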

These three dimensions are not independent; they are tightly coupled and must work in concert. The closer a robot gets to human, the more the consistency of every link in the system matters. A highly realistic face will still strike users as “weird” if its voice responses lag, and even a natural-sounding answer struggles to build genuine trust without matching gaze and expression.

[Image: Some examples of the “uncanny valley effect”]

Therefore, “simulation” has never been a breakthrough in any single technology; it is a system-integration capability spanning perception, materials, control, and semantics. At present, most companies are still testing and refining individual technologies within each dimension, and teams that can close the loop across all three dimensions at once are extremely rare.

Which startups are committed to making robots more “human-like”?

In recent years, more and more companies have entered the field of simulation humanoid robots. Although no company has yet achieved truly systematic integration across the three core dimensions of appearance, behavior, and interaction, some start-ups have shown clear technical potential and development paths in particular directions.

This article surveys a group of Chinese start-ups founded within the past five years, looking at how they are exploring and positioning themselves in simulation robots.

AheadForm

Founded in 2024 by Hu Yuhang, a Ph.D. from Columbia University in the United States, AheadForm is a technology company focused on the R&D and manufacture of highly lifelike robot faces. It has raised an angel round from investors including MiraclePlus and Decent Capital. The company designs head modules for humanoid AI robots, aiming to build a bionic facial system capable of emotional expression, environmental perception, and human-computer interaction, and positions its products as high-end robots with artistic and anthropomorphic qualities.

AnyWit

Founded in December 2023 by a team of Ph.D.s from the Robotics Laboratory of the School of Computer Science at the University of Science and Technology of China, AnyWit develops software and hardware systems for interactive robots. Focusing on embodied intelligence and multimodal perception, the company has launched a humanoid emotional-interaction robot with highly lifelike expressions and soft, skin-like touch. It supports customized deployment and offers a range of product solutions for business and consumer markets (such as campus cultural and creative products and child care).

Smart Faces

Founded at the end of 2024 by Professor Zou Jun of Zhejiang University’s School of Mechanical Engineering and colleagues, with support from the Zhejiang University High-end Equipment Research Institute, Smart Faces develops interactive humanoid robots with human-like facial expressions. Its core technologies include intelligent robotics, speech recognition, and natural language processing. The company has completed and finalized the design of its fourth-generation product, which can display expressions and hold conversations and is intended for home-based elderly care, medical care, and other fields.

Moon Spring Bionic

Moon Spring Bionic was founded in 2022 by the team of Academician Ren Luquan at Jilin University’s Key Laboratory of Engineering Bionics (Ministry of Education). Co-founder Ren Lei is a tenured professor at the University of Manchester, UK, and a Yangtze River Scholar of the Ministry of Education. Building on the “bionic tension-compression robot” technology system developed by Professor Ren Lei, the company has developed the full chain in-house, from the overall machine structure to key components and the power system. The system mimics the coupling between human bones and muscles to create a robot structure with rigid-flexible synergy, offering high mobility, stability, and low energy consumption. The company has completed prototypes of dexterous hands, robotic arms, and walking lower limbs.

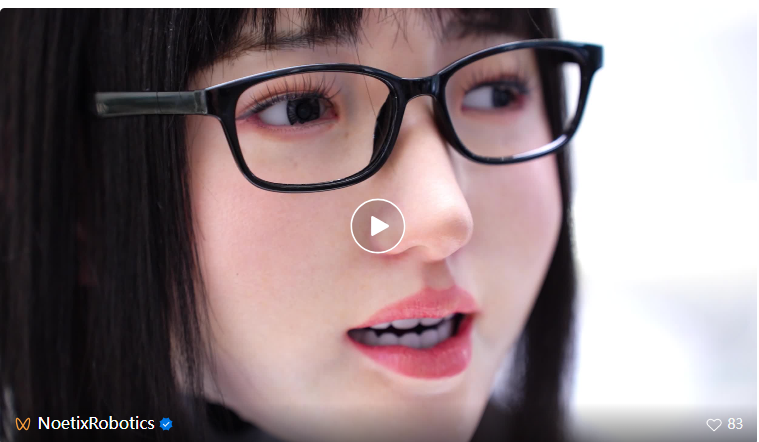

Noetix

Noetix’s Hobbs bionic humanoid robot has a bionic facial skeleton with 30 degrees of freedom. Its latest robot, “Xiao Nuo”, uses an in-house “micro muscle group” drive system made up of 117 micro actuators. Each weighs only 3.2 g and delivers a precise pulling force of 2.4 N, and their bionic layout reproduces the dynamic movement of 42 groups of human facial muscles. The system also uses shape memory alloy as an actuation material, cutting expression response time to 8 ms and significantly outperforming traditional servo systems.

Yunmu Intelligent Manufacturing

Founded in 2021 and headquartered in Taicang, Jiangsu, Yunmu Intelligent Manufacturing has a team with research backgrounds at institutions such as Northwestern Polytechnical University, the Shanghai Academy of Spaceflight Technology, and Harbin Institute of Technology. The company develops complete embodied-intelligence robots built on large models and is among the first companies in China able to mass-produce humanoid robots with lifelike skin. Its robots feature 40 degrees of freedom of whole-body control, bionic arms, and facial expression systems, and integrate natural language processing and visual perception. Representative products include the “Zheng He” bionic robot installed at the Taicang Museum.

Qingbao Robot

Qingbao Robot belongs to Shanghai Qingbao Engine Robotics Co., Ltd., established in 2023 by a founding team from Tsinghua University. The company focuses on integrating humanoid robot bodies with embodied intelligence. Its technology stack covers cloud cognitive systems, flexible actuators, gait control algorithms, micro-expression control, and dexterous hands. Its products have been deployed in cultural and tourism guided tours, educational demonstrations, industrial exhibitions, and other scenarios, and representative customers include Tsinghua University and Ideal Auto.

DroidUp

Founded in 2021 and headquartered in Shanghai, DroidUp develops embodied-intelligence robots for research and education, industrial services, and public applications. Its “Ruina” robot reproduces a human face at 1:1 scale, with rich expression capabilities and a multimodal perception system, and can respond to and learn from changes in emotion. The company has also launched the “Walker” series of bipedal platforms and the small simulation robot “Ulaa”, giving it a multi-tier product line that ranges from expression systems to complete robot platforms.

Digit

Founded in March 2024, Digit aims to bring general-purpose AI robots into large-scale commercial use. It has built three major product lines: the Xiaqi general humanoid robot series, the Xialan humanoid robot series, and the Star Walker IP series.

Among them, the Xialan S01 is the world’s first mass-produced upright-walking robot with a lifelike human face. It combines bionic silicone skin with a facial control system of 26 active degrees of freedom that can simulate 41 human facial muscles, and its algorithms generate expressions in real time. It can perceive the emotions of the person it is talking to, respond with empathetic feedback, and support multimodal interaction. It has been deployed in government service halls, bank branches, exhibition halls (as a brand ambassador), and other settings.

The Xiaqi series incorporates elements of Chinese history and culture and targets the consumer market, focusing on scenarios such as children’s education and family companionship. Star Walker is the world’s first dual-form humanoid robot.

Note:

The Logistics Automation Development Strategy & the 7th International Mobile Robot Integration Application Conference Southeast Asia will be held at the Concorde Hotel Kuala Lumpur, Malaysia, on 21 August 2025. You are welcome to join us.

For the agenda, see: https://cnmra.com/logistics-automation-development-strategy-the-7th-international-mobile-robot-integration-application-conference-southeast-asia-21st-august-2025-concorde-hotel-kuala-lumpur-malaysia/

To register, see: https://docs.google.com/forms/d/e/1FAIpQLSdGHjpHRU0mR0_2ZlqtJpUV25s3XlIIHtkkUUfxz0W6vpBqiA/viewform?usp=header
